In the rapidly evolving world of artificial intelligence, terms like Generative AI and Large Language Models are often tossed around and used interchangeably. However, treating them as synonyms leads to confusion and a lack of clarity when discussing AI technologies. In this entry, I aim to demystify these terms and show where they fit within the broader AI domain.

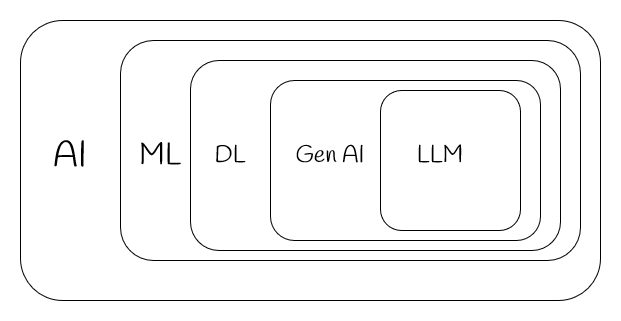

Let’s start at the top. Artificial Intelligence (AI) is the overarching domain that involves creating machines capable of performing tasks that typically require human intelligence. Within AI, Machine Learning (ML) emerges as a significant subdomain, focusing on algorithms and statistical models that enable computers to learn from and make predictions or decisions based on data.

OK, with AI and ML defined, it’s time to dive deeper. ML can be divided into various approaches. One of them is Deep Learning (DL), and within it sit Generative Models, which are designed to generate new data instances that resemble the training data. This is where Generative AI comes into play, encompassing techniques that allow for the creation of content, be it text, images, or even music, that resembles the output of human creativity.

And finally, we have Large Language Models (LLMs) like GPT-4 or PaLM 2, which are a subset of AI models specifically trained on vast amounts of text data. Their primary function is to understand, interpret, and generate human-like text based on the input they receive.
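To make the nesting concrete, here is a minimal sketch that encodes the hierarchy described above as a child-to-parent mapping. It is a simplification that follows this post's framing (real taxonomies are fuzzier), and the names `PARENT`, `ancestors`, and `is_subfield` are made up for illustration.

```python
# Illustrative only: the nesting described in this post, stored as child -> parent.
# This mirrors the post's simplified picture, not an official taxonomy.
PARENT = {
    "Machine Learning": "Artificial Intelligence",
    "Deep Learning": "Machine Learning",
    "Generative AI": "Deep Learning",
    "Large Language Models": "Generative AI",
}


def ancestors(term):
    """Return the broader fields that contain `term`, nearest first."""
    chain = []
    while term in PARENT:
        term = PARENT[term]
        chain.append(term)
    return chain


def is_subfield(term, broader):
    """True if `term` sits somewhere inside `broader` in the mapping."""
    return broader in ancestors(term)


if __name__ == "__main__":
    print(ancestors("Large Language Models"))
    print(is_subfield("Large Language Models", "Generative AI"))
    print(is_subfield("Generative AI", "Large Language Models"))
```

The asymmetry is the point: in this picture every LLM counts as Generative AI, but Generative AI is not limited to LLMs.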

The Intersection and Confusion

The confusion between Generative AI and Large Language Models is understandable. Contemporary products like ChatGPT and rumored offerings like Google Gemini blur the lines by offering capabilities that span both categories. For instance, ChatGPT, a product built on a large language model, can produce coherent and contextually relevant images (with upcoming plugins for DALL-E 3), showcasing Generative AI capabilities beyond text generation. Similarly, the integration of image analysis or generation in these platforms further intertwines the two concepts.

Generative vs. Discriminative Models

Generative AI vs LLM

In a nutshell, Generative AI encompasses a wide range of models designed to produce or generate content. This content can vary from text and images to sound, music, and even video. Within the realm of Generative AI, Large Language Models (LLMs) hold a specific position. As the name suggests, LLMs specialize in handling and generating text. But nothing stops a Large Language Model from understanding a request to generate an image or audio, “asking” a specialized model to produce it, and then including the result in its response. And that’s where it gets a bit tricky, isn’t it?
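The "ask a specialized model" idea can be sketched as a tiny dispatcher. This is a toy under loud assumptions: every function below is a hypothetical stand-in that returns a placeholder string, the keyword check only imitates what a real LLM would infer from the request, and none of it reflects how ChatGPT or any other product is actually wired.

```python
# Toy sketch: every function here is a hypothetical stand-in, not a real API.

def text_model(prompt: str) -> str:
    """Stand-in for the LLM answering in plain text."""
    return f"[text answer to: {prompt}]"


def image_model(prompt: str) -> str:
    """Stand-in for a separate image generator."""
    return f"[image generated for: {prompt}]"


def audio_model(prompt: str) -> str:
    """Stand-in for a separate audio generator."""
    return f"[audio generated for: {prompt}]"


# Specialized generators the "LLM" can delegate to.
SPECIALISTS = {"image": image_model, "audio": audio_model}


def detect_modality(prompt: str) -> str:
    """Crude keyword check imitating the LLM's understanding of the request."""
    lowered = prompt.lower()
    if any(word in lowered for word in ("draw", "image", "picture")):
        return "image"
    if any(word in lowered for word in ("audio", "song", "sound")):
        return "audio"
    return "text"


def respond(prompt: str) -> str:
    """Answer directly for text; otherwise delegate and wrap the result."""
    modality = detect_modality(prompt)
    if modality == "text":
        return text_model(prompt)
    artifact = SPECIALISTS[modality](prompt)
    return f"Here is what you asked for: {artifact}"


if __name__ == "__main__":
    print(respond("Explain transformers"))
    print(respond("Draw a cat wearing a hat"))
```

From the user's side there is a single conversation, even though the image or audio came from a different model. That is exactly why the two terms get mixed up.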