ESRS stands for EMC Secure Remote Support. Its main benefit is that it enables EMC to deliver proactive customer service by identifying and addressing potential problems before they impact the customer’s business.

So what is ESRS in a nutshell?

- Two-way remote connection between EMC and the customer’s EMC products that enables:

  - Remote monitoring

  - Remote diagnosis and repair

- Secure, high-speed, and available 24×7

- Included at no charge for supported products with the Enhanced or Premium Support options

OK, that’s the nutshell. There are three different approaches to installing and configuring ESRS.

ESRS Configurations

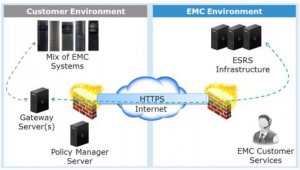

1. ESRS Gateway Client Configuration

Firstly, the ESRS Gateway Client configuration. To my knowledge, this is the most popular option, mostly because it is the most universal one: it is appropriate for customer environments with a heterogeneous mix of EMC products.

For this approach you will need an ESRS Gateway and, optionally, a Policy Manager server. Let me explain what those are.

The ESRS Gateway server runs a single instance of the ESRS application, which coordinates remote connectivity for multiple systems. Because of that, a single Gateway is also a single point of failure. In this scenario, the customer should be prepared to provide one or two Gateway servers. These can be physical servers or VMware virtual machines running Red Hat Enterprise Linux or Windows, and they must be dedicated: no additional applications should run on the Gateway. A second Gateway server is recommended for high availability.

The optional Policy Manager requires a customer-provided server, which can be physical or virtual. This can be any server with network connectivity to ESRS; however, it should not be the same physical or virtual server as the ESRS Gateway. This server does not need to be dedicated. You can use the Policy Manager application to:

- View and change the policy settings for managed EMC systems

- View and approve pending requests from EMC to access a system

- View and terminate remote access sessions

- Check the audit log for recent remote activity

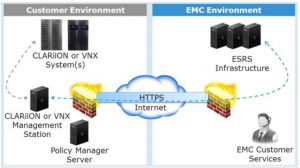

2. ESRS IP Client Configuration

This configuration is appropriate for customer environments that only include CLARiiON or VNX products. Keep in mind that these products also support the ESRS Gateway configuration.

Here, there is no need for a dedicated Gateway. ESRS is installed directly on the CLARiiON or VNX management station, which should be a dedicated customer-supplied physical or virtual server. This server will host the ESRS software as well as other tools and utilities for CLARiiON and VNX products.

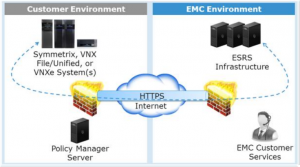

3. ESRS Device Client Configuration

This configuration is appropriate for customer environments that include only VNXe, VNX File and Unified, and Symmetrix products. In this scenario, there is no need for a dedicated server, because ESRS is installed directly on the EMC system. Keep in mind that these products also support the ESRS Gateway configuration. Also note that an ESRS Device Client option for VNX Block products is expected during the second half of 2013.

As with the other ESRS configurations, best practice is to run the optional Policy Manager application on a non-dedicated server to control remote support permissions and record audit logs of all activity.
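To summarize the three options, here is a rough Python sketch that maps a customer’s product mix to the ESRS configurations this post describes as appropriate. The product labels and the function name `applicable_configurations` are hypothetical, and the rules are simplified from the descriptions above, not taken from an official EMC support matrix.

```python
from typing import AbstractSet, FrozenSet, List

# Hypothetical product labels, based on the product names mentioned in this post.
IP_CLIENT_PRODUCTS: FrozenSet[str] = frozenset({"CLARiiON", "VNX"})
DEVICE_CLIENT_PRODUCTS: FrozenSet[str] = frozenset(
    {"VNXe", "VNX File", "VNX Unified", "Symmetrix"}
)


def applicable_configurations(products: AbstractSet[str]) -> List[str]:
    """Return the ESRS configurations that fit a customer's product mix.

    Simplified rules from this post only; not an official support matrix.
    """
    if not products:
        return []
    # Gateway Client: suits any mix, including heterogeneous environments.
    options = ["Gateway Client"]
    # IP Client: only when every product is CLARiiON or VNX.
    if products <= IP_CLIENT_PRODUCTS:
        options.append("IP Client")
    # Device Client: only when every product is VNXe, VNX File/Unified, or Symmetrix.
    if products <= DEVICE_CLIENT_PRODUCTS:
        options.append("Device Client")
    return options
```

For example, `applicable_configurations({"VNX"})` returns `["Gateway Client", "IP Client"]`, while a mixed `{"CLARiiON", "Symmetrix"}` environment leaves only the Gateway Client, which is why that configuration is the most universal.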

Policy Manager settings

The customer can choose from four possible scenarios for managing remote access: three Policy Manager settings, plus running without a Policy Manager at all:

- Always allow

- Never allow

- Ask for approval

- No Policy Manager

“Ask for approval” is the most popular option because the customer is notified each time EMC requests access for any remote activity, and can evaluate the situation before approving or denying the remote support session.
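The four scenarios can be sketched as a small decision function. This is a hypothetical Python model, not an ESRS API: `AccessPolicy`, `handle_access_request`, and the `ask_customer` callback are illustrative names. Treating “no Policy Manager” as allowing the request is an assumption, since this post does not say what happens in that case.

```python
from enum import Enum
from typing import Callable


class AccessPolicy(Enum):
    """The four remote-access scenarios described above (hypothetical model)."""
    ALWAYS_ALLOW = "always allow"
    NEVER_ALLOW = "never allow"
    ASK_FOR_APPROVAL = "ask for approval"
    NO_POLICY_MANAGER = "no policy manager"


def handle_access_request(
    policy: AccessPolicy,
    request: str,
    ask_customer: Callable[[str], bool],
) -> bool:
    """Decide whether a remote support request from EMC may proceed.

    `ask_customer` stands in for the notify-and-approve step; it is a
    hypothetical hook, not a real ESRS interface.
    """
    if policy is AccessPolicy.ALWAYS_ALLOW:
        return True
    if policy is AccessPolicy.NEVER_ALLOW:
        return False
    if policy is AccessPolicy.ASK_FOR_APPROVAL:
        # The customer is notified and evaluates the request before it proceeds.
        return ask_customer(request)
    # Assumption: with no Policy Manager there is nothing to gate the request,
    # so this sketch treats it as allowed (and nothing records an audit entry).
    return True


# Usage: a customer who only approves diagnostic sessions.
def approve_diagnostics(req: str) -> bool:
    return "diagnostic" in req


print(handle_access_request(AccessPolicy.ASK_FOR_APPROVAL, "remote diagnostic session", approve_diagnostics))  # True
print(handle_access_request(AccessPolicy.NEVER_ALLOW, "remote diagnostic session", approve_diagnostics))  # False
```

Passing the approval step in as a callback keeps the policy logic separate from how the customer is actually notified.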