In my last few posts I started to give you a brief introduction into NetApp clustered Data ONTAP (also called NetApp cDOT or NetApp c-mode). Now, it’s not that easy task, because I don’t know your background. I often assume that you have some general experience working with NetApp 7-mode (“older” mode or concept of managing NetApp storage arrays). But just in case if you don’t in this post I want to go through basic NetApp concepts of storage architecture.

From physical disk to serving data to customers

The architecture of Data ONTAP enables to create logical data volumes that are dynamically mapped to physical space. Let’s take a look at the very beginning.

Physical disks

We have our physical disks – which are packed into disk shelves. Once those disks shelves are connected to Storage Controller(s), the Storage Controller itself must own the disk. Why is that important? In most cases you don’t want to have a single NetApp array, you want to have a cluster of at least 2 nodes to increase the reliability of your storage solution. If you have two nodes (two storage controllers), you want to have an option to fail-over all operations to one controller, if the second one fails, right ? Right – to do so, all physical disks have to be visible by both nodes (both storage controllers). But – during normal operations (both controllers are working as HA – High Availability Pair), you don’t want one controller to “mess with” the others controller data, since those are working independently. That’s why, once you attach physical disks to your cluster and you want to use those disks, you have to decide which controller (node) owns each physical disk.

Disk types

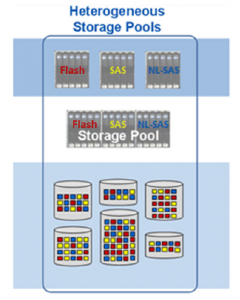

NetApp can work with variety of different disk types. Typically you can devide those disks in terms of use:

- Capacity – this category describes disks that are lower cost and typically bigger in terms of capacity. Those disks are good to store the data, however they are “slow” in terms of performance. Typically you use those disks if you want to store a copy of your data. Disks types such as: BSAS, FSAS, mSATA

- Performance – those are typically SAS or FC-AL disks. However nowadays SAS are the most popular. Those are a little bit more expensive but provides better performance results

- Ultra-performance – those are solid-sate drives disks, SSD. They are the most expensive in terms of price per 1GB, hoewever they give the best performance results.

RAID groups

OK, we have our disks which are owned by our node (storage controller). It’s a good start, but that’s not everything we need to start serving data to our customers. Next step are RAID groups. Long story short RAID group consists of one or mode disks, and as a group it can either increase the performance (for example RAID-0), or increase the security (for example RAID-1).. Or do both of those (for example RAID-4 or RAID-DP). If you haven’t heard about RAID groups before, that might be a little bit confusing right now. For sake of this article think of RAID group as a bunch of disks that creates a structure on which you put data. This structure can survive a disk failure, and increase performance (comparing to working only on single disks). This definition briefly describes RAID-4. This RAID group is often used in NetApp configurations, however the most popular is RAID-DP. The biggest difference is that RAID-DP can survive two disks failure, and still serve data. How is that possible? Well, it’s possible because those groups are using 1 (for RAID-4) or 2 (for RAID-DP) disks as ‘parity disks’. It means those are not storing customer data itself, but they are used to store “control sum” of those data. In other words if you have 10 disks in RAID-4 configuration it means you have a capacity of 9 disks, since 1 disk is used for parity. If you have 10 disks in RAID-DP confiugration it means you have a capacity of 8 disks, since 2 are used for parity.

That’s the end of part 1. In part 2 I will go futher, explaining how to build aggregates, create volumes and serve files and LUNs to our customers.