You can think of FAST Cache as a “DRAM cache” built from Flash drives, but with much higher capacity. Let’s start at the beginning:

DRAM cache is a storage-system component that improves performance by transparently storing data on very fast storage media (DRAM). Its capacity is limited, and it is very expensive.

FAST Cache technology supplements the available storage-system cache (DRAM cache) by adding up to 2 TB of read/write FAST Cache. The nice trick is that FAST Cache addresses hot spots anywhere in the array, on both RAID group LUNs and storage pool LUNs. A hot spot is simply a busy area on a LUN.

How does FAST Cache work?

Data on LUNs that becomes busy is promoted to FAST Cache. Promotion depends on the number of accesses (reads and/or writes) within a 64 KB chunk of storage, and does not depend on whether the data already exists in DRAM cache. If you use FAST VP, I/Os to data that already resides in the Extreme Performance (Flash) tier are not promoted to FAST Cache.
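
To make the 64 KB granularity concrete, here is a minimal Python sketch of per-chunk access counting. The function names and the `(lun, offset, tier)` tuple format are hypothetical, and tagging each I/O with its FAST VP tier is a simplification; the sketch only illustrates that reads and writes anywhere inside the same 64 KB chunk count toward that chunk, regardless of what is in DRAM cache.

```python
from collections import Counter

CHUNK_SIZE = 64 * 1024  # FAST Cache tracks activity per 64 KB chunk


def chunk_id(lun, offset):
    """Return the (LUN, chunk number) that contains a byte offset."""
    return (lun, offset // CHUNK_SIZE)


def count_accesses(ios):
    """Count accesses per chunk.

    ios: iterable of hypothetical (lun, offset, tier) tuples. Reads and writes
    count the same. I/Os to data in the FAST VP Extreme Performance tier are
    skipped because they are not promoted to FAST Cache. Whether the data is
    also in DRAM cache is deliberately ignored.
    """
    counts = Counter()
    for lun, offset, tier in ios:
        if tier == "extreme_performance":
            continue
        counts[chunk_id(lun, offset)] += 1
    return counts


ios = [
    ("LUN0", 0, "performance"),
    ("LUN0", 4096, "performance"),       # same 64 KB chunk as offset 0
    ("LUN0", 70000, "performance"),      # falls in the next chunk
    ("LUN1", 0, "extreme_performance"),  # skipped: already on Flash via FAST VP
]
counts = count_accesses(ios)
```

Here chunk 0 of `LUN0` collects two accesses, chunk 1 collects one, and the Extreme Performance I/O is not counted at all.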

Let’s assume we have a scenario when FAST Cache memory is empty:

- When the first I/O is sent by the application, the FAST Cache policy engine looks for an entry in the FAST Cache memory map for the I/O’s data chunk. Because the memory map is empty at this stage, the data is accessed from the HDD LUN. This is called a FAST Cache miss. EMC claims there is minimal performance overhead in checking the memory map for every access to a FAST Cache enabled LUN.

- If the application frequently accesses data in a 64 KB chunk of storage, the policy engine copies that chunk from the HDD LUN to FAST Cache. This operation is called promotion, and this period is called the warm-up period for FAST Cache.

- When the application accesses this data again, the policy engine sees (based on the memory map) that it is in FAST Cache. This is called a FAST Cache hit. Because the data is accessed from the Flash drives, the application gets very low response times and high IOPS.
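
The miss, promotion and hit sequence above can be sketched as a toy Python model. The class name, its dictionaries and `PROMOTION_THRESHOLD = 3` are assumptions for illustration rather than EMC's implementation, and only reads are counted for brevity. In this model the memory map stores only where a chunk lives on Flash; the data itself sits in the separate `flash` dictionary.

```python
CHUNK_SIZE = 64 * 1024
PROMOTION_THRESHOLD = 3  # assumption: accesses needed before a chunk is promoted


class FastCacheSketch:
    """Toy model of FAST Cache lookups and promotion (not EMC's implementation)."""

    def __init__(self, hdd):
        self.hdd = hdd            # HDD LUN: chunk number -> data
        self.flash = {}           # Flash drives: page number -> data
        self.memory_map = {}      # metadata only: chunk number -> Flash page number
        self.access_counts = {}   # policy engine's per-chunk access counter

    def read(self, offset):
        chunk = offset // CHUNK_SIZE
        page = self.memory_map.get(chunk)
        if page is not None:
            # FAST Cache hit: the memory map points at a Flash page.
            return "hit", self.flash[page]
        # FAST Cache miss: serve the I/O from the HDD LUN and count the access.
        data = self.hdd[chunk]
        self.access_counts[chunk] = self.access_counts.get(chunk, 0) + 1
        if self.access_counts[chunk] >= PROMOTION_THRESHOLD:
            self._promote(chunk, data)
        return "miss", data

    def _promote(self, chunk, data):
        page = len(self.flash)          # next free page; no eviction in this sketch
        self.flash[page] = data         # copy the 64 KB chunk to Flash
        self.memory_map[chunk] = page   # record its location in the memory map


fc = FastCacheSketch({0: b"hot chunk", 1: b"cold chunk"})
results = [fc.read(100)[0] for _ in range(4)]  # four reads within chunk 0
```

The first three reads are misses (the third one triggers the promotion), and the fourth is served from Flash as a hit.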

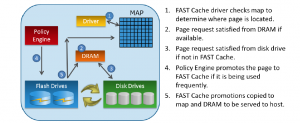

Reads

Incoming I/O from the host application is checked against the FAST Cache memory map to determine whether the I/O is for a chunk that is already in FAST Cache. If the chunk is not in FAST Cache, the I/O request follows the same path it would follow if the storage system did not have FAST Cache. However, if the data chunk is in FAST Cache, the policy engine redirects the I/O request to FAST Cache.
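
A minimal Python sketch of the read redirection decision, assuming the memory map can be modelled as a dictionary keyed by `(lun, chunk_number)`. The function name and its return values are hypothetical.

```python
CHUNK_SIZE = 64 * 1024  # FAST Cache granularity


def route_read(lun, offset, memory_map):
    """Decide where a host read is serviced.

    memory_map: hypothetical dict {(lun, chunk_number): flash_page} standing in
    for the FAST Cache memory map.
    """
    page = memory_map.get((lun, offset // CHUNK_SIZE))
    if page is None:
        # Not in FAST Cache: same path as a system without FAST Cache.
        return ("normal_path", None)
    # Chunk is in FAST Cache: the policy engine redirects the read to Flash.
    return ("fast_cache", page)


memory_map = {("LUN0", 2): 17}  # chunk 2 of LUN0 lives on Flash page 17
print(route_read("LUN0", 2 * CHUNK_SIZE + 512, memory_map))  # ('fast_cache', 17)
print(route_read("LUN0", 0, memory_map))                     # ('normal_path', None)
```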

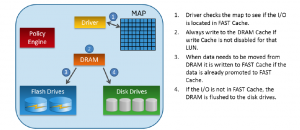

Writes

If the host I/O request is a write operation for a data chunk in FAST Cache, and write cache is not disabled for the LUN, the DRAM cache is updated with the new write data and an acknowledgment is sent back to the host. The host data is not written directly to FAST Cache. When the data later needs to be moved out of DRAM cache, it is written to FAST Cache.
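
A sketch of this write path in Python, assuming write cache is enabled for the LUN. The class, its dictionaries and the `flush` trigger are hypothetical, and sending chunks that are not in FAST Cache straight to the HDD is an assumed stand-in for the normal (non-FAST Cache) destage path.

```python
CHUNK_SIZE = 64 * 1024


class WritePathSketch:
    """Toy write path; assumes write cache is enabled for the LUN."""

    def __init__(self, memory_map):
        self.memory_map = memory_map  # hypothetical: chunk number -> Flash page
        self.dram = {}                # DRAM write cache: chunk number -> data
        self.flash = {}               # FAST Cache: Flash page -> data
        self.hdd = {}                 # back-end HDD LUN: chunk number -> data

    def write(self, offset, data):
        # The host write lands in DRAM cache and is acknowledged immediately;
        # nothing is written to FAST Cache yet.
        self.dram[offset // CHUNK_SIZE] = data
        return "ack"

    def flush(self):
        # When data has to leave DRAM cache, chunks that are in FAST Cache are
        # written to Flash; others (assumption) go to the HDD LUN as usual.
        for chunk, data in self.dram.items():
            page = self.memory_map.get(chunk)
            if page is not None:
                self.flash[page] = data
            else:
                self.hdd[chunk] = data
        self.dram.clear()


w = WritePathSketch(memory_map={0: 5})  # chunk 0 is already in FAST Cache
w.write(10, b"new data")                # acknowledged from DRAM cache
w.write(70000, b"other data")           # chunk 1 is not in FAST Cache
w.flush()
```

Until `flush` runs, both writes exist only in DRAM cache; afterwards chunk 0 sits on Flash page 5 and chunk 1 on the HDD.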

A write-back operation is when data is copied from FAST Cache to the back-end HDDs. This happens when a FAST Cache promotion is scheduled but there are no free or clean pages available in FAST Cache.
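
A toy Python model of when a write-back happens, assuming a fixed number of FAST Cache pages and least-recently-used victim selection (the eviction order is an assumption, not something stated above). The class and method names are hypothetical. A clean page can simply be reused, while a dirty page must first be copied to the back-end HDD.

```python
from collections import OrderedDict


class FlashPagesSketch:
    """Toy model of FAST Cache pages; capacity and LRU order are assumptions."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()  # chunk -> (data, dirty); least recently used first
        self.hdd = {}               # back-end HDD: chunk -> data
        self.write_backs = 0

    def promote(self, chunk, data):
        if chunk in self.pages:
            return
        if len(self.pages) >= self.capacity:
            self._make_room()
        self.pages[chunk] = (data, False)  # promoted copy matches the HDD: clean

    def write_to_page(self, chunk, data):
        """Update a chunk that is already in FAST Cache; the page becomes dirty."""
        self.pages[chunk] = (data, True)
        self.pages.move_to_end(chunk)      # now the most recently used page

    def _make_room(self):
        # A clean page can be reused without touching the HDD.
        clean = next((c for c, (_, dirty) in self.pages.items() if not dirty), None)
        if clean is not None:
            del self.pages[clean]
            return
        # No free or clean pages: write back the least recently used dirty page.
        chunk, (data, _) = self.pages.popitem(last=False)
        self.hdd[chunk] = data
        self.write_backs += 1


fp = FlashPagesSketch(capacity=2)
fp.promote(1, b"a")
fp.promote(2, b"b")
fp.write_to_page(1, b"a2")  # both pages become dirty
fp.write_to_page(2, b"b2")
fp.promote(3, b"c")         # no free or clean page: chunk 1 is written back
```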

Can you verify my understanding? Under the heading “Let’s assume we have a scenario when FAST Cache memory is empty:”

1. When the first I/O is sent by the application, the FAST Cache policy engine looks for an entry in the FAST Cache memory map

Is the FAST Cache memory map the same as the FAST Cache in number 2?

2. If the application frequently accesses data in a 64 KB chunk of storage, the policy engine copies that chunk from the HDD LUN to FAST Cache

***Is the frequently accessed data in a 64 KB chunk copied to the FAST Cache memory map?***

When you talk about FAST Cache and the memory map, are they the same thing, or does FAST Cache live somewhere else?