FAST VP stands for Fully Automated Storage Tiering for Virtual Pools. It’s a very smart solution for dynamically matching storage requirements with changes in the frequency of data access. FAST VP segregates disk drives into three categories called tiers (already explained in EMC VNX – RAID groups vs Storage Pools):

- Extreme Performance Tier – Flash drives

- Performance Tier – 15k and 10k SAS drives

- Capacity Tier – Near-line SAS drives (that can be up to 4TB in size)

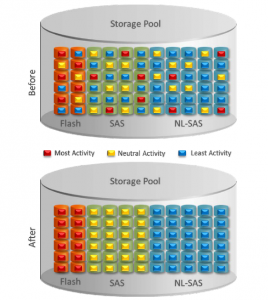

The main benefit of FAST VP is a lower Total Cost of Ownership: it maintains high performance while using a mix of expensive and cheaper disks. The idea is based on the fact that only a small part of the data (usually not more than 5%) is accessed frequently. Because of that, the more active data located on heterogeneous pools can be placed on a higher tier to lower the read response time. The before/after picture illustrates the effect.
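To make the cost argument concrete, here is a minimal Python sketch that compares a mixed pool with an all-flash pool of the same size. The tier sizes and the relative prices are made-up illustration values, not EMC pricing, and `pool_cost` is a hypothetical helper.

```python
# Relative cost per TB for each tier. These numbers are hypothetical
# units chosen only to show the arithmetic, not real prices.
RELATIVE_PRICE_PER_TB = {"flash": 10, "sas": 3, "nl_sas": 1}


def pool_cost(tb_per_tier, price_per_tb):
    """Sum the cost of a pool given its capacity (in TB) on each tier."""
    return sum(tb * price_per_tb[tier] for tier, tb in tb_per_tier.items())


# A 100 TB pool where only 5% of the capacity sits on flash (assumed split).
mixed_pool = {"flash": 5, "sas": 20, "nl_sas": 75}
all_flash_pool = {"flash": 100}

mixed = pool_cost(mixed_pool, RELATIVE_PRICE_PER_TB)
all_flash = pool_cost(all_flash_pool, RELATIVE_PRICE_PER_TB)
print(f"mixed pool: {mixed} units, all-flash pool: {all_flash} units")
```

With these assumed numbers the mixed pool costs a fraction of the all-flash pool, while the small amount of frequently accessed data can still live on flash.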

Tiering policies

FAST VP is an automated feature that implements a set of user-defined tiering policies to ensure the best performance for various environments. FAST VP uses an algorithm to make data relocation decisions based on the activity level of each slice. It ranks the order of data relocation across all LUNs within each separate pool. The available LUN-level policies are:

- Highest Available Tier – this policy is used when quick response times are a priority. The Highest Available Tier policy starts with the hottest (most active) slices first and places them in the highest available tier until the tier’s capacity or performance capability limit is reached.

- Auto-Tier – a small portion of a large set of data may be responsible for most of the I/O activity in a system. This policy moves a small percentage of the ‘hot’ data to higher tiers while keeping the rest of the data in the lower tiers. Auto-Tier automatically relocates data to the most appropriate tier based on the activity level of each data slice. LUNs set to Highest Available Tier take precedence when capacity in the high tier is limited.

- Start High then Auto-Tier – this policy is the default for each newly created pool LUN (on heterogeneous pools, obviously). It takes advantage of both the Highest Available Tier and Auto-Tier policies. Start High then Auto-Tier sets the preferred tier for initial data allocation to the highest performing disk drives with available space. After some time it relocates the LUN’s data based on the performance statistics and the auto-tiering algorithm.

- Lowest Available Tier – this policy is used when cost effectiveness is the highest priority. Data is initially placed on the lowest available tier with capacity. It’s perfect for LUNs that are not performance or response-time sensitive. All slices of these LUNs will remain on the lowest storage tier available in the pool, regardless of their activity level.

- No Data Movement – this policy can be chosen only after a LUN is created. Data remains in its current tier, so the performance and response time are predictable. The data can still be relocated within the tier, and the system still collects statistics on these slices, so if you change the policy (for example to Auto-Tier) the storage array has all the information it needs to move a portion of the data to the appropriate tiers.
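The way the policies interact can be sketched with a toy model. The Python below is only an illustration under stated assumptions, not EMC's actual algorithm: the `Slice` class, the shortened policy names (`"highest"`, `"auto"`, `"lowest"`), the activity scores and the `place_slices` function are all hypothetical, and Start High then Auto-Tier and No Data Movement are left out to keep it short.

```python
from dataclasses import dataclass

TIERS = ["Extreme Performance", "Performance", "Capacity"]  # fastest first


@dataclass
class Slice:
    name: str
    policy: str      # "highest", "auto" or "lowest" (simplified names)
    activity: float  # hypothetical activity score; higher means hotter


def place_slices(slices, free_per_tier):
    """Return {slice name: tier name} for a toy pool.

    free_per_tier lists the free slice count of each tier, fastest first.
    """
    for s in slices:
        if s.policy not in ("highest", "auto", "lowest"):
            raise ValueError(f"unknown policy: {s.policy}")

    free = list(free_per_tier)
    placement = {}

    def take(slice_, tier_order):
        # Put the slice in the first tier of tier_order that has room.
        for tier in tier_order:
            if free[tier] > 0:
                free[tier] -= 1
                placement[slice_.name] = TIERS[tier]
                return
        raise ValueError("pool is full")

    top_down = range(len(TIERS))
    bottom_up = range(len(TIERS) - 1, -1, -1)
    hottest_first = sorted(slices, key=lambda s: s.activity, reverse=True)

    # Lowest Available Tier: stay as low as possible, whatever the activity.
    for s in hottest_first:
        if s.policy == "lowest":
            take(s, bottom_up)
    # Highest Available Tier slices are served before Auto-Tier slices.
    for policy in ("highest", "auto"):
        for s in hottest_first:
            if s.policy == policy:
                take(s, top_down)
    return placement


pool = [
    Slice("A", "highest", 1),
    Slice("B", "auto", 90),
    Slice("C", "auto", 10),
    Slice("D", "lowest", 99),
]
print(place_slices(pool, [1, 1, 2]))
```

In this example slice A gets the single flash slot even though it is almost idle, because its LUN uses Highest Available Tier, while the very busy slice D stays on the Capacity tier because its LUN uses Lowest Available Tier.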

Data Relocation

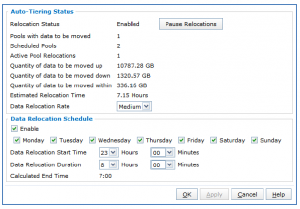

Data relocation is a process that moves data between the available tiers within the storage pool, according to the chosen tiering policy and the statistics collected for the LUN’s slices. You can set a Relocation Schedule or start the process manually. The Data Relocation Status can have three values:

- Ready – no active data relocations

- Relocating – data relocations are in progress

- Paused – all data relocations for system are paused.

As I mentioned before, the FAST VP feature allows for automatic data relocation based on a user-defined relocation schedule. The schedule defines when and how frequently the array starts data relocation on the participating storage pools. The Data Relocation Schedule section also lets you define the operational rate for the data relocation operation. The possible values are low, medium and high. Low has the least impact on system performance, high has the most. The default value is medium.
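A relocation schedule boils down to a set of days, a start time, a duration and a rate. The sketch below models that in Python to show how such a window could be evaluated. The `RelocationSchedule` class and its field names are hypothetical and do not mirror the array's real configuration format; only the three rate values and the medium default come from the description above. The window is assumed to be at most 24 hours long.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta

RATES = ("low", "medium", "high")


@dataclass
class RelocationSchedule:
    days: frozenset        # weekday numbers, Monday = 0
    start: time            # time of day at which the window opens
    duration: timedelta    # window length, assumed to be 24 hours or less
    rate: str = "medium"   # medium is the default rate

    def __post_init__(self):
        if self.rate not in RATES:
            raise ValueError(f"rate must be one of {RATES}")

    def is_open(self, now: datetime) -> bool:
        """Return True if 'now' falls inside a scheduled window."""
        # Check the window that started today and the one that started
        # yesterday, so windows crossing midnight are handled.
        for days_back in (0, 1):
            day = now.date() - timedelta(days=days_back)
            if day.weekday() in self.days:
                begin = datetime.combine(day, self.start)
                if begin <= now < begin + self.duration:
                    return True
        return False


# Example: relocate on Saturdays from 22:00 for 8 hours at the default rate.
weekend = RelocationSchedule(frozenset({5}), time(22, 0), timedelta(hours=8))
print(weekend.rate, weekend.is_open(datetime(2024, 1, 7, 3, 0)))
```

The example date is a Sunday at 03:00, which still falls inside the window that opened on Saturday evening.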

Using FAST VP for file

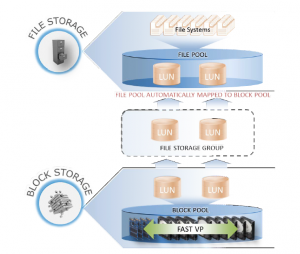

To create file systems you have to begin by provisioning LUNs from a block storage pool with mixed tiers. The provisioned LUNs are added to the ~filestorage Storage Group. After a rescan of the storage system, a diskmark operation is started that presents the newly created storage to a new file storage pool. If the block pool LUNs used for creating the File Storage Pool were created with FAST VP enabled, the file systems will use this technology as well. Take a look at the picture in this section.
